Key Claims

- Displacement can occur without full automation: Once AI amplifies enough of your coworkers or clients, compression effects can displace roles throughout the hierarchy, even if AI cannot do your whole job.

- Your risk depends on your tasks, not your job title: Risk depends heavily on your task structure, position in the hierarchy, and organizational context. Two people with the same job title can face displacement timelines that differ by years.

- There is little cost to preparation: Over-preparing for displacement that never arrives is relatively cheap, while being underprepared can significantly erode your economic agency, especially as AI capabilities grow rapidly.

- Policy levers need to be implemented: AI is unlike prior waves of automation. In at-will employment, firms have little incentive to retain roles that add little value relative to the cost of AI compute; without active guardrails, the labor market faces a real risk of mass unemployment.

Introduction

Researchers at leading AI labs predict that we will reach AGI (Artificial General Intelligence) sometime within the next 3-12 years. Politicians, business executives, and AI forecasters make similar predictions. Here, AGI means systems that are more capable, cheaper, and faster than any human at any cognitive labor task. These systems will amplify individual productivity in the near term, but they could also displace human workers.

If you're skeptical of that claim, you have good reason to be. "Automation will take your job" has been predicted before: by 19th-century Luddite textile workers, by economists warning about tractors in the 1920s, by analysts predicting the end of bank tellers when ATMs arrived. Those predictions were mostly wrong. New jobs emerged, transitions stretched over decades, and human adaptability proved more robust than forecasters expected. Why should AI be any different?

Three factors separate AI from previous waves: speed, breadth, and the economics of cognitive labor. AI capabilities are increasing much faster than the rate at which we can upskill, and these systems aim to replace the function of human intelligence in many cognitive tasks. But AI does not need to reach "general intelligence" levels of capability to disrupt the labor market, and we are already seeing it happen in white-collar roles.

Displacement occurs under two main scenarios:

- Complete displacement: Your entire function or service can be replaced by AI. We're seeing this happen in low-level design work, transcription, photography, modeling, and voice acting. Even if your job is split into many tasks, you are still displaced because your colleagues or clients can replace your entire service at a low marginal cost by prompting AI.

- Gradual displacement: This is more common, as most white-collar jobs involve diverse tasks that vary in complexity and time horizons. AI will automate portions of your task set, which reduces the organizational need that originally justified your role.

AI capabilities are naturally compared to the human brain, and in many respects AI is still far from matching the strengths of our working minds: tackling complex problems with incomplete information, continual learning, navigating emotions or relationships, and long-term coherent agency. Your role may not be displaced by AI providing the entire service of a Data Analyst III, but AI might soon handle enough of your tasks that your organization no longer needs a full-time person in your position.

Don't Lose Your Job is a modeling platform that measures gradual displacement in white-collar roles. The questionnaire captures your job's task structure, domain characteristics, hierarchy position, and organizational context, then models those layers of friction and amplification against trends of AI capability growth from METR data. The model makes several assumptions about these forces, but you can (optionally) tune these coefficients in the Model Tuning section to see the effects of your own assumptions.

The model does not forecast potential government or business policies that might mandate human involvement in certain tasks or slow AI adoption. Beyond individual planning, this tool aims to inform policy discussions about maintaining human agency and oversight in the labor market.

The model is open-source. You can build your own versions by visiting github.com/wrenthejewels/DLYJ.

Related Resources

- Rudolf Laine and Luke Drago's The Intelligence Curse (or their shorter article in TIME)

- The AI Futures Model, the leading model for capability forecasting

- Leading research organizations: Epoch, Cosmos Institute, METR, Mercor, and BlueDot Impact

Why This Time Might Be Different

The history of automation anxiety is largely a history of false alarms. Understanding why previous predictions failed, and why this time the underlying dynamics may have genuinely shifted, is essential to calibrating how seriously to take current forecasts.

The track record of automation fears

Economists describe displacement anxiety in terms of the "lump of labor" fallacy: the assumption that there is a fixed amount of work to be done, so that automation necessarily reduces employment. Historical evidence shows this assumption is wrong.

In the early 19th century, Luddite weavers destroyed textile machinery, convinced that mechanical looms would eliminate their livelihoods. They were partially right, as hand weaving did decline, but textile employment overall expanded as cheaper cloth created new markets and new jobs emerged around the machines themselves.

A century later, agricultural mechanization triggered similar fears. In 1900, roughly 40% of American workers labored on farms. By 2000, that figure had dropped below 2%. Yet mass unemployment never materialized. Workers moved into manufacturing, then services, then knowledge work. The economy absorbed displaced agricultural workers over decades, creating entirely new categories of employment that didn't exist when tractors first arrived.

The ATM story is also relevant. ATMs spread in the 1970s-80s, and many predicted the end of bank tellers. Instead, the number of bank tellers actually increased. ATMs reduced the cost of operating branches, so banks opened more of them, and tellers shifted from cash handling to sales and customer service. The job title persisted even as the job content transformed.

The mechanism is straightforward: automation increases productivity, which reduces costs, increases demand, and creates new jobs, often in categories that didn't exist before. Spreadsheets let accountants perform more sophisticated financial analysis and created demand for analysts who could use the new tools, rather than displacing analysis as a profession. Markets are adaptive, and new forms of valuable work consistently emerge.

What's structurally different about AI

AI-driven displacement differs from historical precedents in ways that may compress generational transitions into years.

Speed of capability growth. AI capabilities are increasing exponentially. Skill acquisition, organizational change, and policy response operate on much slower cycles, so capability growth can outpace the rate at which workers and institutions adapt. Even if AI-driven wealth is eventually redistributed, many current workers can still fall through the gap during early waves of displacement. If this happens, you may have fewer opportunities for outlier success than ever before.

Breadth of application. Tractors replaced farm labor, ATMs replaced cash-handling, and spreadsheets replaced manual calculation. Each previous automation wave targeted a relatively narrow domain. AI targets a wide range of cognitive work: writing, analysis, coding, design, research, communication, planning. There are fewer adjacent cognitive domains to migrate into when the same technology is improving across most of them at once, so the traditional escape route of "move to work that machines can't do" becomes less available.

The economics of cognitive vs. physical labor. Automating physical tasks required capital-intensive machinery: factories, tractors, robots. The upfront costs were high, adoption was gradual, and physical infrastructure constrained deployment speed. Typewriters, computers, and the internet enhanced our cognitive abilities by speeding up how information is recorded, processed, and shared. AI replaces cognitive labor itself through software, with marginal costs approaching zero once the systems are trained. A company can deploy AI assistance to its entire workforce in weeks, not years, and some of that "assistance" has already replaced entire job functions. The infrastructure constraint that slowed previous automation waves doesn't apply in the same way.

The "last mile" problem is shrinking. Previous automation waves often stalled at edge cases. Machines could handle the 80% of routine work but struggled with the 20% of exceptions that required human judgment, which created stable hybrid roles where humans handled exceptions while machines handled volume. AI's capability profile is different, and each model generation significantly expands the fraction of edge cases it can handle, so "exceptions only" roles look more like a temporary phase than a permanent adjustment.

No clear "next sector" to absorb workers. Agricultural workers moved to manufacturing, manufacturing workers moved to services, and service workers moved to knowledge work. Each transition had a visible destination sector that was growing and labor-intensive. If AI automates knowledge work, what's the next sector? Some possibilities exist (caregiving, trades, creative direction), but it's unclear whether they can absorb the volume of displaced knowledge workers or whether they pay comparably.

Is there a case for continued optimism?

The historical pattern may not break completely, since we will keep redefining "work":

New job categories we can't predict. The most honest lesson from history is that forecasters consistently fail to anticipate the jobs that emerge. "Social media manager" wasn't a job in 2005. AI is already creating new roles: prompt engineers, AI trainers, AI safety researchers, human-AI collaboration specialists, AI ethicists, AI auditors. As AI capability grows, more categories will likely emerge around oversight, customization, integration, and uniquely human services that complement AI capabilities. Our imagination genuinely fails to predict future job categories, and some current workers will successfully transition into AI-related roles that don't yet have names.

- Counterargument: Historical job creation happened because automation couldn't do everything; machines handled physical labor, so humans moved to cognitive labor. If AI handles cognitive labor, what's the structural reason new human-specific jobs must emerge? The optimistic case relies on "something will come up" without identifying the mechanism. New jobs may also require different skills, be located in different geographies, or pay differently than displaced jobs. New jobs will emerge, and some jobs that require strategic thinking will stay, but displacement may be happening faster than the new economy can stabilize. Even without AI, it is exceedingly difficult to switch to a more "strategic" or high-level role.

Human preferences for human connection. Some services stay human by choice, even if AI can do them. People may still want therapists, teachers, doctors, and caregivers in the loop. Human connection carries value AI cannot replicate. We see this in practice: many shoppers seek humans for complex purchases, in-person meetings matter for relationships despite videoconferencing, and customers escalate from chatbots to humans for emotional support or tricky problems. Roles rooted in care, creativity, teaching, and relationships may keep human labor even when AI is technically capable.

- Counterargument: This argument is strong, and it will likely sustain the last remaining jobs (absent new policy). But preferences often yield to economics: people might prefer human-crafted furniture but buy IKEA, and they might prefer human customer service but use chatbots when the alternative is longer wait times, so price pressure can push AI adoption even where human service is preferred. Preferences may also shift generationally; young people who grow up with AI assistants may have different comfort levels than those who didn't. And many knowledge work jobs don't involve direct human connection (data analysis, coding, research), so this argument doesn't protect them.

Organizational friction is real. Real-world organizations are far messier than economic models suggest. Bureaucratic inertia, change management challenges, legacy systems, regulatory constraints, and organizational dysfunction slow AI adoption dramatically. The timeline from "AI can do this" to "AI has replaced humans doing this" could be much longer than capability curves suggest.

- Counterargument: Friction slows adoption but doesn't stop it; competitive pressure forces even reluctant organizations to move, early adopters put holdouts under cost pressure, and organizational dysfunction can delay change while also prompting faster layoffs. Friction does buy time, but it is not a long-term shield against displacement.

Regulatory protection. The EU AI Act and similar frameworks could mandate human oversight in high-stakes domains. Some jurisdictions may require human involvement in medical diagnosis, legal decisions, hiring, or financial advice regardless of AI capability. Professional licensing boards may resist AI encroachment.

- Counterargument: There is no strong counterargument here; we will need policy action at the state and corporate levels to keep humans involved in, and benefiting from, task completion.

The Economics of Replacement

Automation decisions are driven by both capabilities and economic constraints. A firm won't replace you with AI just because AI can do your job; it will replace you when the economics favor doing so.

The basic decision calculus

When a firm considers automating a role, it is implicitly running a cost-benefit analysis that weighs several factors:

- Labor cost. Higher-paid roles create stronger economic incentive for automation. A $200,000/year senior analyst represents more potential savings than a $50,000/year entry-level assistant. This is why knowledge workers face higher automation pressure than minimum-wage service workers, despite the latter seeming more "automatable" in some abstract sense.

- Volume and consistency. Tasks performed frequently and predictably are more attractive automation targets than rare, variable tasks. The fixed costs of implementing automation (integration, testing, change management) amortize better across high-volume work.

- Error tolerance. Domains where mistakes are cheap favor aggressive automation. Domains where errors are catastrophic (medical diagnosis, legal advice, safety-critical systems) favor slower adoption and human oversight. Your role's error tolerance affects how willing your organization is to accept AI imperfection.

- Implementation cost. Beyond the AI itself, automation requires integration with existing systems, workflow redesign, training, and change management. These costs vary enormously by organization. A tech company with modern infrastructure faces lower implementation costs than a legacy enterprise with decades of technical debt.

The decision simplifies to: Is (labor cost × volume × quality improvement) greater than (implementation cost + ongoing AI cost + risk of errors)? When it is, automation becomes economically rational regardless of any abstract preference for human workers.
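To make the inequality concrete, here is a minimal Python sketch that evaluates it for one hypothetical role. It assumes every input is an annual dollar figure and reads "quality improvement" as a multiplier on the labor cost being replaced (1.0 means AI output matches human output). The class, field names, and numbers are illustrative, not taken from the model's code.

```python
from dataclasses import dataclass


@dataclass
class AutomationCase:
    """Inputs to the replacement heuristic; every figure is a hypothetical annual amount."""
    labor_cost_per_task: float   # what the firm pays a human per completed task
    annual_volume: int           # how many such tasks are performed per year
    quality_factor: float        # AI output quality relative to human (1.0 = parity)
    implementation_cost: float   # integration, workflow redesign, training (annualized)
    ai_cost_per_year: float      # ongoing compute and licensing
    expected_error_cost: float   # expected yearly cost of AI mistakes

    def benefit(self) -> float:
        # Left side: labor cost x volume x quality
        return self.labor_cost_per_task * self.annual_volume * self.quality_factor

    def cost(self) -> float:
        # Right side: implementation + ongoing AI cost + risk of errors
        return self.implementation_cost + self.ai_cost_per_year + self.expected_error_cost

    def favors_automation(self) -> bool:
        return self.benefit() > self.cost()


high_volume = AutomationCase(50, 2_000, 0.9, 30_000, 10_000, 15_000)
low_volume = AutomationCase(50, 200, 0.9, 30_000, 10_000, 15_000)
print(high_volume.favors_automation())  # True: 90,000 > 55,000
print(low_volume.favors_automation())   # False: 9,000 < 55,000
```

Only the volume differs between the two cases. Because the fixed implementation cost is spread over one-tenth as many tasks, the same role flips from worth automating to not worth it.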

Weighing new options for intelligence

A common misconception is that AI must outperform humans to threaten jobs. AI only needs to be good enough at a low enough price, and for enough of your tasks.

Consider two scenarios:

- Scenario A: A human produces work at 95% quality for $100,000/year.

- Scenario B: AI agents produce work at 85% quality for $10,000 worth of compute a year, with the quality increasing and the cost decreasing every year thereafter.

For many business contexts, a 10-percentage-point quality drop is acceptable given a 90% cost reduction. This is especially true for work that does not need to be highly reliable on the first attempt, since a senior employee can have agents draft and revise a task several times faster than they could iterate with junior staff. The quality threshold for automation is often lower than workers assume.
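A quick check of the arithmetic in the two scenarios, sketched in Python. Going from 95% to 85% is a 10-percentage-point drop, about 10.5% in relative terms. "Cost per quality point" is a crude illustrative ratio introduced here, not a metric the model uses.

```python
def cost_per_quality_point(annual_cost: float, quality_pct: float) -> float:
    """Dollars per percentage point of output quality: a crude value-for-money ratio."""
    return annual_cost / quality_pct


human = cost_per_quality_point(100_000, 95)   # Scenario A
agents = cost_per_quality_point(10_000, 85)   # Scenario B

cost_reduction = 1 - 10_000 / 100_000         # fraction of spend eliminated
absolute_drop = 95 - 85                       # percentage points of quality lost
relative_drop = absolute_drop / 95            # share of the human's quality lost

print(f"Cost reduction: {cost_reduction:.0%}")                                  # 90%
print(f"Quality drop: {absolute_drop} points ({relative_drop:.1%} relative)")   # 10 points (10.5% relative)
print(f"Quality per dollar: agents ~{human / agents:.1f}x the human")           # ~8.9x
```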

This explains why displacement often begins with lower-level roles. Entry-level work typically has higher error tolerance (seniors review it anyway), lower quality requirements (it's meant to be refined upstream), and lower absolute labor costs (making the implementation investment harder to justify for any single role, but easier when aggregated across many juniors).

How firms will deploy agents

A common objection to AI displacement forecasts is that current models have limited context windows and can't hold an entire job's worth of knowledge in memory. This misunderstands how AI systems are actually deployed. Organizations don't replace workers with a single model instance; they deploy fleets of specialized agents, each handling a subset of tasks with tailored prompts, tools, and retrieval systems. Not everything you know about your role can fit into one model's context window, but that knowledge can be distributed across system prompts, vector databases, and other systems that document how your role works. The aggregate system can exceed human performance on many tasks even when individual agents are narrower than human cognition.

This architecture mirrors how organizations already function. No single employee holds complete knowledge of all company processes; information is distributed across teams, documentation, and institutional memory. As agentic systems mature, the orchestration becomes more sophisticated; agents can spawn sub-agents, maintain persistent memory across sessions, and learn from feedback loops.
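The fleet architecture can be illustrated with a toy Python sketch. Everything here is hypothetical: `Agent` and `route` are illustrative names rather than any real agent framework's API, the prompts and knowledge entries are invented, and a simple keyword-overlap lookup stands in for a vector database's embedding search. The point is only that each agent carries a narrow prompt and its own slice of documented knowledge, so no single context window has to hold the whole role.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """A hypothetical specialized agent: a narrow prompt plus its own slice of knowledge."""
    name: str
    system_prompt: str   # instructions that would be sent to the underlying model
    handles: set[str]    # task categories this agent is responsible for
    # Stand-in for a vector database: document title -> documented practice
    knowledge: dict[str, str] = field(default_factory=dict)

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        """Return up to k entries, ranked by word overlap between the query and each title."""
        query_words = set(query.lower().split())
        ranked = sorted(
            self.knowledge.items(),
            key=lambda item: len(query_words & set(item[0].lower().split())),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


def route(task_category: str, fleet: list[Agent]) -> Agent:
    """Dispatch a task to the first agent whose specialty covers it."""
    for agent in fleet:
        if task_category in agent.handles:
            return agent
    raise LookupError(f"no agent handles {task_category!r}")


fleet = [
    Agent("reconciler", "You reconcile vendor statements.", {"reconciliation"},
          {"vendor name matching rules": "Treat 'Acme Inc' and 'ACME Incorporated' as one vendor."}),
    Agent("reporter", "You draft monthly summaries.", {"reporting"},
          {"monthly report template": "Lead with variance against budget."}),
]
agent = route("reconciliation", fleet)
print(agent.name, agent.retrieve("vendor name matching"))
```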

Work will become more digitized through meeting transcripts, emails, project trackers, and saved drafts, and agents will gain a clearer view of how tasks are actually carried out inside an organization. Over time, this helps the system understand the practical steps of a role rather than just the final result.

These agents will learn from these accumulated examples, and they can begin to handle a larger share of routine or well-structured tasks. They also improve more quickly because new work records continuously update their understanding of how the organization prefers things to be done. This reduces certain forms of friction that once made roles harder to automate, such as tacit knowledge or informal processes that previously were not recorded.

Competitive dynamics and the adoption cascade

Once one major player in an industry successfully automates a function, competitors face pressure to follow. This creates an adoption cascade:

- Early adopters deploy AI in a function, reducing their cost structure.

- Competitors observe the cost advantage and begin their own automation initiatives.

- Industry standard shifts as automation becomes necessary for competitive parity.

- Holdouts face pressure from investors, boards, and market forces to automate or accept structural cost disadvantages.

This dynamic means that your firm's current attitude toward AI adoption may not predict your long-term risk. A conservative organization that resists automation today may be forced to adopt rapidly if competitors demonstrate viable cost reductions. Consider both how your company views AI today and how it will respond once competitors adopt it.

The role of investor expectations

Public and venture-backed companies face additional pressure from capital markets. Investors increasingly expect AI adoption as a signal of operational efficiency and future competitiveness. Earnings calls now routinely include questions about AI strategy, and companies that can demonstrate AI-driven productivity gains are rewarded with higher valuations.

The reverse is also true: companies that resist automation may face investor pressure, board questions, and competitive positioning concerns that push them toward adoption faster than they would otherwise choose.

Translating AI Capabilities to Your Displacement Timeline

Measuring AI progress

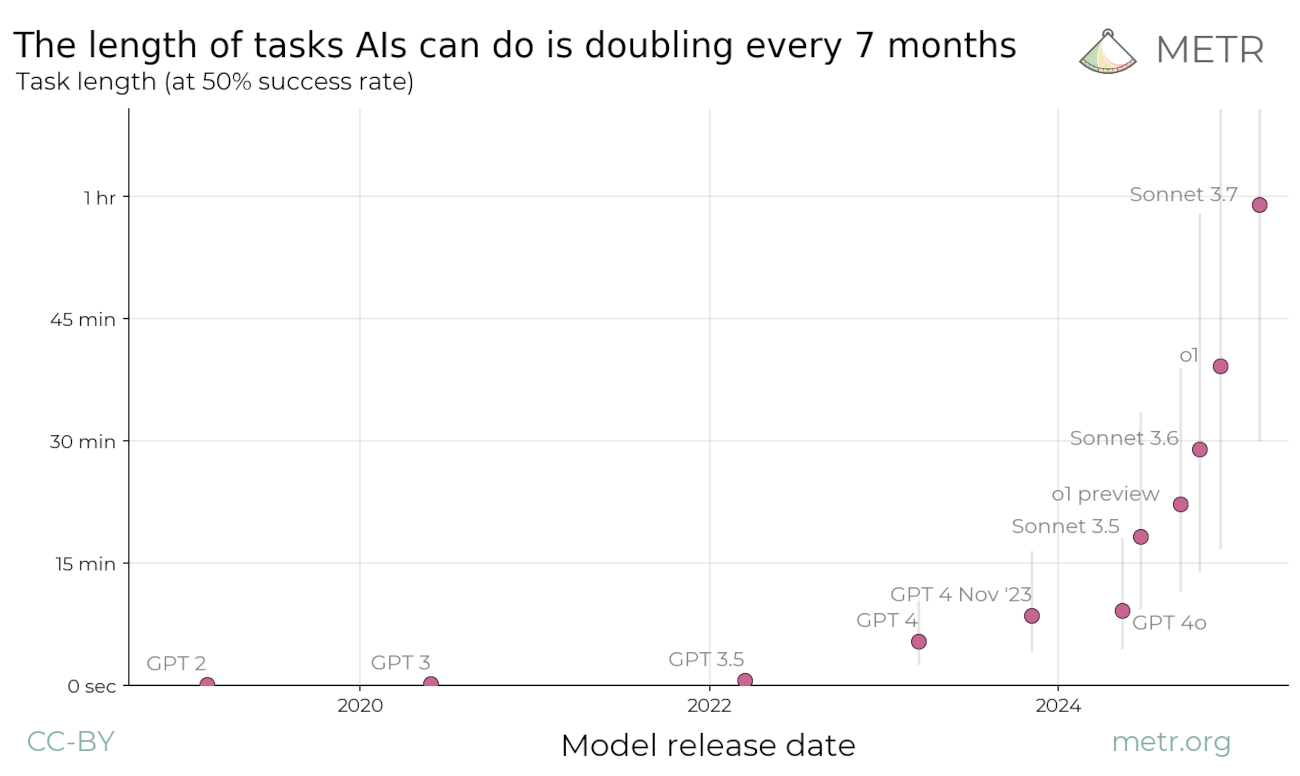

AI research organization METR measures AI capabilities by the length (in human working time) of software engineering tasks that models can autonomously complete. Even when measured against different success rates, models have demonstrated exponential growth since the launch of public-facing models, with a doubling time of roughly seven months. Extrapolating this trend at the 50% success-rate threshold, models would be able to autonomously complete tasks that take humans weeks or months in less than 5 years.
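The extrapolation follows from the doubling formula h(t) = h0 · 2^(t/d), where h0 is today's horizon and d is the doubling time. A minimal Python sketch, assuming a hypothetical starting horizon of 1 hour and treating a working week as 40 hours and a working month as roughly 167 hours; none of these figures comes from this article, so substitute current METR numbers before relying on the output.

```python
import math

DOUBLING_MONTHS = 7.0  # approximate doubling time cited above


def horizon_hours(months_from_now: float, start_hours: float,
                  doubling_months: float = DOUBLING_MONTHS) -> float:
    """50%-success time horizon under exponential growth: h(t) = h0 * 2**(t / d)."""
    return start_hours * 2 ** (months_from_now / doubling_months)


def years_until(target_hours: float, start_hours: float,
                doubling_months: float = DOUBLING_MONTHS) -> float:
    """Invert h(t) = target: t = d * log2(target / h0), converted from months to years."""
    return doubling_months * math.log2(target_hours / start_hours) / 12


START_HOURS = 1.0  # hypothetical starting horizon, not a METR figure
for label, hours in [("work week", 40), ("work month", 167)]:
    print(f"{label}: ~{years_until(hours, START_HOURS):.1f} years")
```

Because the target enters through a logarithm, a 2x error in the starting horizon shifts the answer by only one doubling (about 7 months), whereas the doubling time scales the whole timeline.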

From benchmarks to your job

METR's benchmarks measure software engineering tasks, but displacement happens across every knowledge domain. Code is structured, digital, and verifiable, which makes software a leading indicator. Other cognitive domains will likely follow at similar task lengths, but each domain faces its own translation delay.

Work that resembles software (digital, decomposable, with clear success criteria) will track closely with METR benchmarks. Work involving tacit knowledge, physical presence, or relationship-dependent judgment will lag behind. The model handles this through domain friction multipliers. Software engineering roles face minimal friction, while legal, operations, and traditional engineering roles face higher friction due to regulatory constraints, liability concerns, and less structured workflows.

How we calibrate the model

The questionnaire captures four factors that determine when AI displacement becomes likely for your specific role:

- Task structure: Highly decomposable, standardized work concentrates in shorter task buckets that AI clears first. Complex, context-dependent work concentrates in longer task buckets that AI reaches later.

- Domain alignment: Digital, data-rich workflows align well with AI's training domain. Work involving physical presence, relationship judgment, or uncodified expertise translates with friction.

- Hierarchy position: Entry-level roles face maximum compression vulnerability, while senior roles face reduced vulnerability plus longer implementation delays (as AI is less likely to assume their strategic work).

- Organizational context: Your timeline also depends on employer-specific friction. Regulated sectors may move more slowly at first, then quickly once competitive pressures become apparent. Startups with minimal legacy infrastructure can deploy and experiment with agents more quickly, while enterprises with decades' worth of existing systems will face more barriers to deploying effective agents. A highly capable AI that your conservative, heavily regulated employer struggles to deploy represents a different risk profile than the same AI in the hands of an aggressive tech company more attuned to labor costs.

Reading your results

The METR curve serves as the baseline forecast of AI model capabilities. We then make assumptions about how much time you spend in different task "buckets" (sorted by how long the tasks take to complete) based on your role and hierarchy level, and we add friction to the METR curve to estimate how hard it is for AI to complete tasks of each length in your context. That friction is derived from your questionnaire responses, but you can change the weights of these multipliers in the Model Tuning section.

We also make assumptions about industry-specific friction for your tasks and about how reliable AI needs to be before a task counts toward your risk. These are tunable with the sliders beneath the model, and moving them can have a pronounced effect on your displacement timeline. These forces combine into a weighted readiness score; once it crosses a threshold (typically around 50%, adjusted by hierarchy), the automation hazard opens. Implementation delay and compression parameters then shift that hazard into the green curve you see in your results.

When you complete the questionnaire, the model generates a chart showing two curves over time:

The blue curve shows technical feasibility (the automation hazard without implementation delay or compression). It turns on when AI clears your job's coverage threshold (typically ~50% of your task portfolio) based on your task mix. Digital, decomposable domains open the gate sooner; tacit/physical domains open later. Senior roles lift the threshold slightly and soften the ramp; entry-level roles lower it.
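The coverage gate can be sketched in Python as follows. This is a simplified illustration of the idea, not the tool's implementation: the task buckets, time shares, friction multipliers, and the plus-or-minus 0.05 hierarchy adjustment are hypothetical values chosen for the example, and friction is modeled simply as dividing the benchmark horizon.

```python
from dataclasses import dataclass


@dataclass
class TaskBucket:
    length_hours: float  # typical human time to finish one task in this bucket
    time_share: float    # fraction of the worker's time spent here (shares sum to 1)


def coverage(buckets: list[TaskBucket], ai_horizon_hours: float, domain_friction: float) -> float:
    """Share of work time in buckets short enough for AI to handle.

    domain_friction >= 1 shrinks the benchmark horizon for this domain;
    1.0 means software-like work that tracks the benchmark directly.
    """
    effective_horizon = ai_horizon_hours / domain_friction
    return sum(b.time_share for b in buckets if b.length_hours <= effective_horizon)


def gate_open(covered: float, seniority: str, base_threshold: float = 0.5) -> bool:
    """Hypothetical hierarchy adjustment: entry-level lowers the ~50% gate, senior lifts it."""
    adjustment = {"entry": -0.05, "mid": 0.0, "senior": 0.05}[seniority]
    return covered >= base_threshold + adjustment


role = [TaskBucket(0.25, 0.3), TaskBucket(2, 0.3), TaskBucket(8, 0.25), TaskBucket(40, 0.15)]
digital = coverage(role, ai_horizon_hours=4, domain_friction=1.5)  # effective horizon ~2.7 h
tacit = coverage(role, ai_horizon_hours=4, domain_friction=3.0)    # effective horizon ~1.3 h
print(digital, gate_open(digital, "mid"))  # 0.6 True
print(tacit, gate_open(tacit, "mid"))      # 0.3 False
```

Doubling the friction multiplier halves the effective horizon, which in this example is enough to drop coverage from 60% to 30% and keep the gate closed.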

The green curve shows when you are likely to actually lose your job, accounting for real-world implementation barriers. This is the timeline that matters for planning your career. The green curve combines two displacement mechanisms:

- Delayed automation: The blue curve's timeline, shifted later by organizational friction.

- Workforce compression: An earlier pathway where AI does not replace you directly but amplifies senior workers who then absorb your tasks. Junior roles and standardized work face higher compression risk.

The vertical axis shows cumulative displacement probability. A green curve reaching 50% at year 4 means there is a 50% probability of displacement within 4 years, and 50% probability you remain employed beyond that point. Steep curves indicate displacement risk concentrates in a narrow window, while gradual curves spread risk over many years. Early divergence between curves signals high compression vulnerability.
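One way to see how the two pathways combine, and how to read off the 50% point, is a small Python sketch. It is not the model's actual math: the logistic curve shapes, the 1.5-year delay, and the 30% compression ceiling are hypothetical, and treating the two pathways as independent (so the probability of displacement is 1 minus the probability that neither occurs) is a simplifying assumption.

```python
import math
from typing import Callable


def logistic_cdf(t: float, midpoint: float, steepness: float) -> float:
    """S-shaped cumulative probability rising from 0 toward 1 around `midpoint` (years)."""
    return 1.0 / (1.0 + math.exp(-steepness * (t - midpoint)))


def blue(t: float) -> float:
    # Technical feasibility: hypothetical gate centered on year 3
    return logistic_cdf(t, midpoint=3.0, steepness=1.5)


def green(t: float, delay_years: float = 1.5, compression_ceiling: float = 0.3) -> float:
    # Pathway 1: delayed automation, the blue curve shifted later by organizational friction
    delayed = blue(t - delay_years)
    # Pathway 2: compression, hypothetically earlier than automation but capped below 1
    compression = compression_ceiling * logistic_cdf(t, midpoint=2.0, steepness=1.0)
    # Simplifying assumption: independent pathways, so P(either) = 1 - P(neither)
    return 1.0 - (1.0 - delayed) * (1.0 - compression)


def year_reaching(curve: Callable[[float], float], p: float,
                  lo: float = 0.0, hi: float = 30.0) -> float:
    """Bisection for the year a non-decreasing cumulative curve reaches probability p."""
    for _ in range(60):
        mid = (lo + hi) / 2
        if curve(mid) < p:
            lo = mid
        else:
            hi = mid
    return hi


median = year_reaching(green, 0.5)
spread = year_reaching(green, 0.75) - year_reaching(green, 0.25)
print(f"50% displacement probability reached around year {median:.1f}")
print(f"Middle half of the risk falls within about {spread:.1f} years")
```

Because compression adds risk before delayed automation ramps up, the green curve reaches 50% earlier than the delayed-automation pathway alone would (year 4.5 under these assumptions).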

Three examples

- Alex: Compressed out in ~1-1.5 years.

Alex is a junior developer: writing code, fixing bugs, documenting changes. The work is fully digital and breaks into clean pieces. As AI tools improve, senior engineers absorb Alex's workload. They ship faster with AI assistance, and the backlog of junior-level tickets shrinks.

Alex sits at the bottom level of his company's software engineers, and eventually AI amplifies enough of his colleagues that his contributions are no longer worth his salary to the firm.

- Jordan: Protected for 7+ years.

Jordan is a management consultant with years of strong client relationships. His deliverables are technically digital (slides, memos, etc.), but he spends much of his time in face-to-face meetings and often draws on tacit knowledge of unique cases when advising clients. His clients are weighing AI-driven displacement in their own firms, which raises challenges the consulting market has not previously had to consider. Each project needs a custom approach, and while Jordan uses AI tools to assist his planning, only he can be trusted to advise on broad change management. Compression risk is nearly zero, and Jordan's business stands to benefit from the AI displacement wave.

- Sarah: Medium risk, 3-5 year timeline.

Sarah is a mid-level accountant whose work involves processing invoices, reconciling statements, and preparing journal entries. The work is mostly digital and somewhat structured, but it requires human judgment: matching vendor names, deciding when to escalate a discrepancy, and calling coworkers for audit assistance. She handles "tickets" just like Alex, but hers require more context to complete.

Uncertainty in the forecast

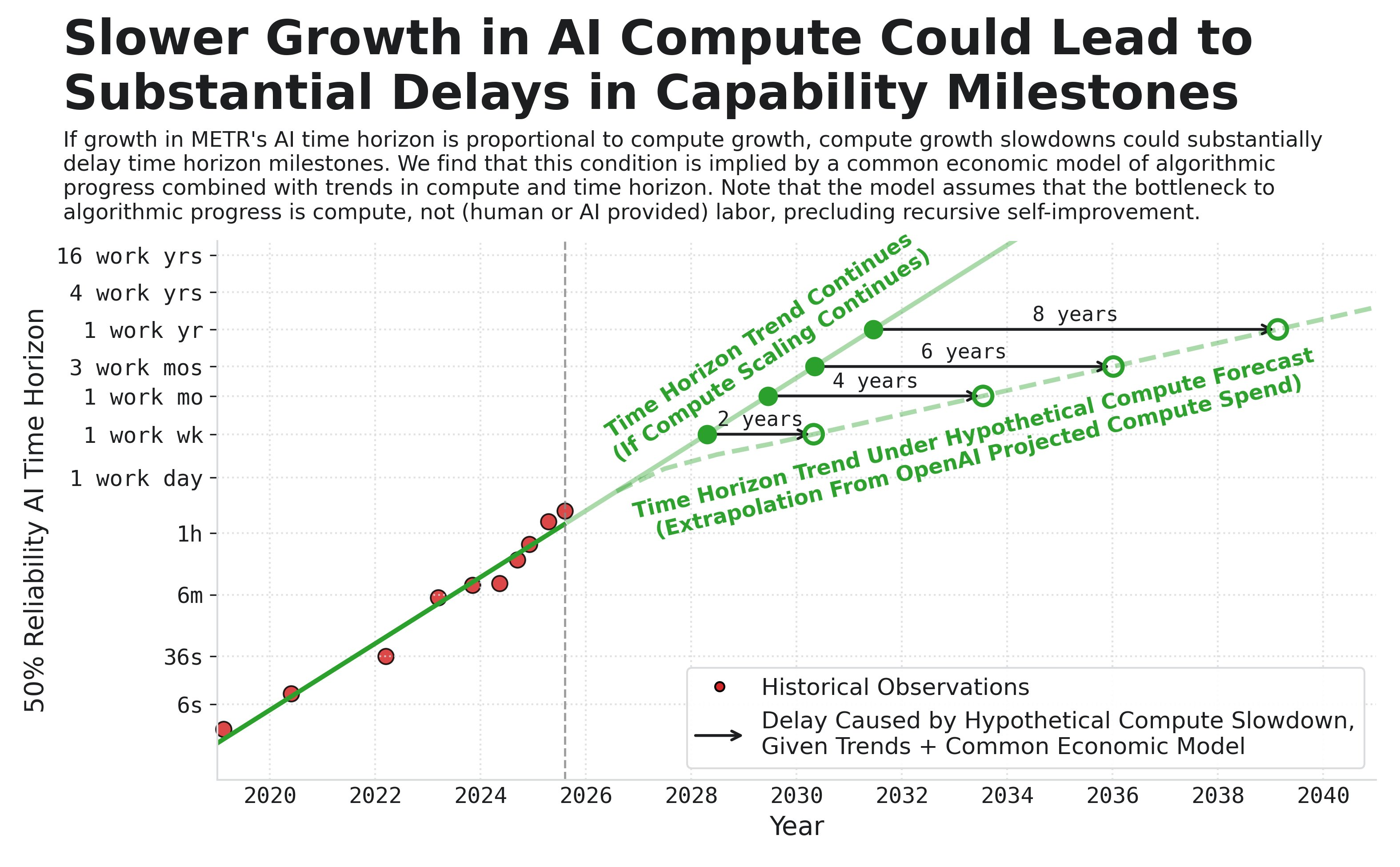

While these timelines may seem fast, the trendline for model capabilities is not certain to hold (which is why we allow you to tune it in the model). Current forecasts extrapolate from recent trends, but compute scaling may hit limits, algorithmic progress may slow, or AI may hit capability ceilings. In their paper "Forecasting AI Time Horizon Under Compute Slowdowns," METR researchers show that the capability doubling rate is proportional to compute investment growth. If compute investment decelerates, key milestones could be delayed by years.

That said, even if growth slows, substantial capability growth has already occurred and will continue. For current workers, the question is whether a plateau happens before or after their jobs are affected. The historical 7-month doubling time held steady from 2019 to 2025, and more recent 2024-2025 data suggests the rate may be accelerating to roughly a 4-month doubling time.
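To see how much the doubling time matters, here is a Python sketch comparing the time needed to reach a roughly one-month horizon under three doubling times. The 1-hour starting horizon, the 167-hour target, and the 14-month "slowdown" scenario are hypothetical assumptions for illustration; only the 7-month and 4-month figures come from the text above.

```python
import math


def years_to_target(target_hours: float, start_hours: float, doubling_months: float) -> float:
    # Solve start * 2**(t / d) = target for t, then convert months to years
    return doubling_months * math.log2(target_hours / start_hours) / 12


START_HOURS = 1.0     # hypothetical current 50% horizon (assumption)
TARGET_HOURS = 167.0  # roughly one working month (assumption)

scenarios = {
    "recent acceleration (4-month doubling)": 4.0,
    "historical trend (7-month doubling)": 7.0,
    "hypothetical slowdown (14-month doubling)": 14.0,
}
baseline = years_to_target(TARGET_HOURS, START_HOURS, 7.0)
for name, doubling in scenarios.items():
    years = years_to_target(TARGET_HOURS, START_HOURS, doubling)
    print(f"{name}: {years:.1f} years ({years - baseline:+.1f} vs. trend)")
```

Years to a fixed milestone scale linearly with the doubling time, so a slowdown that doubles it also doubles the wait.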

What You Can Do About It

You cannot control AI capability growth, market competition, or how your industry responds. You do have some influence over where you sit in that process and how much time you have to adjust. Individual action will not fix AI displacement by itself, but it can buy you runway, options, and a better position from which to push for collective change.

Personal moves that may work

In the near term, there are some useful actions that can buy you time and flexibility.

Learn how your workflows complement AI. Understand which parts of your work AI already handles well, where you add value, and how you can structure tasks so that both strengths work together. People who can design and oversee AI-enabled workflows are more useful to their organizations and better prepared as roles shift.

Shift toward higher-context work where you can. Roles that involve judgment, coordination, and relationships are harder to automate than pure execution, especially in the short run. Moving part of your time toward context-heavy or integrative work can slow the impact on you, even if it does not remove it.

Increase the cost of removing you. Strong performance, reliability, and a central role in coordination do not make you safe, but they create organizational friction. When cuts happen, people who are trusted, visible, and hard to replace often receive more time, better options, or softer landings.

Explore other routes for agency. Skills that transfer across companies, a professional network, a record of public work, and some financial buffer all make it easier to adapt if your role changes quickly. These do not change the aggregate risk, but they change how exposed you are to it.

These are high-agency moves, but they mostly shift your place on the curve rather than changing the curve itself. They are worth making because they give you more control over your own landing and more capacity to engage with the bigger problem.

Policy interventions

If AI continues to compress and automate large parts of knowledge work, there will not be enough safe roles for everyone to move into. At that point, the question is less about how any one person adapts and more about how we share the gains and the risks: who owns the systems, who benefits from the productivity, and what happens to people whose roles are no longer needed.

How societies respond to AI-driven displacement will be shaped by policy choices actively being debated. Transition support programs (extended unemployment benefits, government-funded retraining, educational subsidies) face questions about whether retraining can work fast enough when target jobs are also changing rapidly. Human-in-the-loop mandates could require human involvement in high-stakes decisions regardless of AI capability, preserving employment by regulation. Automation taxes might slow adoption and fund transition support, while wage subsidies could make human labor more competitive. Universal basic income would decouple income from employment through regular payments funded by productivity gains. Broader ownership models might distribute AI capital through sovereign wealth funds or employee ownership requirements. And labor organizing could negotiate over automation pace, transition support, and profit-sharing.

Beyond these, societies will likely need to reckon with the nature of at-will employment and redefine what "good performance" at work means. If workers provide little comparative value to firms once AI becomes highly capable, our current economic models give firms little incentive to reward us with continued employment and new opportunities for labor. But we built AI, and human work provides the crucial data needed for pretraining, so I think we can develop a system that routes AI's success to people rather than to corporations that become increasingly mechanized.

Perhaps it's a democratized input model, where current workers are rewarded with ownership stakes in the models they help train. This could provide scaled returns for our existing workforce, especially as agents clone and expand within our organizations, and it follows the existing capitalist principle of being rewarded for economic contribution. It doesn't solve the problem for new graduates entering the workforce, and it needs some tinkering, but it may be a more tangible path than "we'll just distribute UBI derived from strong AI." UBI (or even the Universal Basic Compute idea that's been floating around) is a strong idea for a social safety net, but it likely will not be developed in time to catch people who face the early waves of unemployment.

You can engage by informing your representatives, supporting research organizations like Epoch, the Centre for the Governance of AI, and the Brookings Future of Work initiative, participating in professional associations, and contributing worker perspectives to public discourse.

A Note From Me

Thank you for reading and engaging with my work. Building this model took a lot of time, and translating a fast-moving field into something that feels clear, usable, and tunable was harder than I expected. I hope it helped you understand the dynamics behind your results and gave you a better sense of what the next few years might look like.

This project is completely unrelated to my main job, but I will continue to evolve it as this technology does. I believe AI is one of the most significant dangers to our society in a long time, and job loss is only one of the many issues we face from unchecked, unregulated growth. We have to continue developing tools to defensively accelerate the pace of change.

If you'd like to contact me, please see my socials at the bottom of the page.

Clay